Artificial intelligence tools like generative AI and predictive AI are rapidly transforming industries, with the potential even to expedite scientific discoveries. AI assistants can already sift through vast scientific literature, design experiments, analyse complex data, and even interpret intricate laboratory setups. This vision, however, hinges on AI's ability to think like a scientist and to seamlessly integrate diverse forms of information, from visual observations to numerical measurements and theoretical frameworks. But how good are today’s AIs at imitating human thinking?

New research from Friedrich Schiller University Jena, Germany, and the Indian Institute of Technology (IIT) Delhi has introduced a comprehensive benchmark called MaCBench to test the capabilities and, more importantly, the limitations of multimodal language models, specifically vision large language models (VLLMs), in chemistry and materials science.

Did You Know? Researchers are using AI to predict the properties of new chemical compounds even before they're synthesised in a lab. AI is also becoming essential just to keep up, helping researchers sift through millions of papers, experimental results, and simulations.

The researchers found that while these advanced AI models show promising abilities in basic perception tasks, they hit significant roadblocks when faced with more complex scientific reasoning. For instance, VLLMs demonstrated near-perfect performance in identifying laboratory equipment and could extract standardised data with high accuracy. They were also good at matching hand-drawn molecules to their simplified text descriptions (SMILES strings).

However, their performance plummeted when tasks required a deeper understanding. They struggled with spatial reasoning, such as correctly describing the relationship between different parts of a molecule (as in isomers) or assigning crystal systems to structures. Interpreting complex experimental results, like atomic force microscopy (AFM) images or mass spectrometry and nuclear magnetic resonance spectra, also proved challenging, with the models often performing barely better than random guessing. A particularly striking finding was their difficulty with tasks requiring multiple steps of logical inference, such as ranking peak intensities in an X-ray diffraction pattern, even when they could identify the highest peak. This suggests that current AI models might be relying more on pattern matching from their training data than on a true scientific understanding.
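To make the difference between the two X-ray diffraction tasks concrete, here is a minimal Python sketch. The peak list is made up for illustration and the function names are hypothetical; none of it comes from MaCBench itself. Finding the highest peak takes a single comparison pass, whereas ranking requires ordering every peak relative to every other one.

```python
# Hypothetical peak list: (2-theta position in degrees, relative intensity).
# These numbers are invented for illustration, not taken from the benchmark.
peaks = [(28.4, 100.0), (47.3, 55.0), (56.1, 30.0), (69.1, 6.0), (76.4, 11.0)]


def strongest_peak(peaks):
    """Single-step task: return the position of the most intense peak."""
    return max(peaks, key=lambda p: p[1])[0]


def rank_peaks(peaks):
    """Multi-step task: return all positions, most intense first."""
    ordered = sorted(peaks, key=lambda p: p[1], reverse=True)
    return [position for position, _ in ordered]


print(strongest_peak(peaks))
print(rank_peaks(peaks))
```

A model that answers the first question correctly but gets the second wrong has located one salient feature without keeping track of how the remaining peaks compare with one another.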

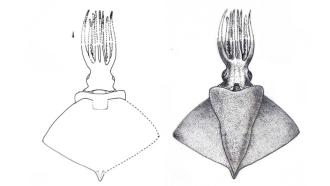

The team developed MaCBench, a benchmark designed to mirror real-world scientific workflows. Instead of creating artificial puzzles, MaCBench focuses on three core pillars of the scientific process: information extraction from scientific literature, experimental execution, and data interpretation. Within each pillar, they included a wide array of tasks. For information extraction, models had to pull data from tables and plots or interpret chemical structures. For experimental execution, tasks involved understanding laboratory safety, identifying equipment, and assessing crystal structures. The data interpretation pillar challenged models to analyse various types of scientific data, from spectral analysis to electronic structure interpretation.

The benchmark used a mix of multiple-choice and numeric-answer questions, drawing on both images mined from patents and newly generated visual data. Their benchmarking studies helped them pinpoint why models were failing, revealing that performance often degraded when tasks required flexible integration of different information types or multi-step logical thought.
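As a rough illustration of how a benchmark with these two question types might be scored, the Python sketch below checks multiple-choice answers by exact letter match and numeric answers against a relative tolerance, then compares accuracy with the random-guess baseline. The function names and the 5% tolerance are assumptions made for this example, not details taken from the study.

```python
def score_multiple_choice(predicted, correct):
    """Return True if the chosen option letter matches the answer key."""
    return predicted.strip().upper() == correct.strip().upper()


def score_numeric(predicted, target, rel_tol=0.05):
    """Return True if the prediction is within rel_tol of the target.

    The 5% default is an assumed tolerance for illustration only.
    A target of exactly zero requires an exact match.
    """
    return abs(predicted - target) <= rel_tol * abs(target)


def accuracy(results):
    """Fraction of questions answered correctly."""
    return sum(results) / len(results) if results else 0.0


def random_baseline(n_options):
    """Expected accuracy from guessing uniformly among n_options choices."""
    return 1.0 / n_options


# Hypothetical results for four multiple-choice questions with four options each.
results = [
    score_multiple_choice("b", "B"),
    score_multiple_choice("A", "C"),
    score_multiple_choice("D", "D"),
    score_multiple_choice("C", "C"),
]
print(accuracy(results), "vs. random baseline", random_baseline(4))
```

Comparing against the baseline matters because an accuracy that looks respectable in isolation can be close to what guessing would produce when each question offers only a few options.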

MaCBench provides a holistic assessment of VLLMs across the scientific workflow, where visual and textual information must be seamlessly combined. The identified limitations are clear: current VLLMs lack robust spatial reasoning, struggle to synthesise information presented in different modalities (for example, they perform better when the same information is presented as text rather than as an image), and falter when tasks demand sequential logical steps. The study also highlighted sensitivities to inference choices, such as how scientific terminology is used or how prompts are worded, suggesting that even minor changes can significantly impact performance. Interestingly, the researchers also observed a strong correlation between a model's performance on crystal structure tasks and the internet prominence of those structures, reinforcing the idea that models might be recalling patterns rather than truly reasoning.

Understanding these fundamental limitations is not a setback but a crucial step forward. By clearly defining where current AI models fall short, this research provides a roadmap for developing more reliable AI-powered scientific assistants and self-driving laboratories. These insights suggest that future advancements will require not just bigger models, but also better ways of curating training data and developing new training approaches that foster genuine scientific reasoning and cross-modal integration. Ultimately, by addressing these challenges, AI can move beyond being a sophisticated pattern-matcher to become a knowledgeable partner, accelerating the pace of discovery.

This article was written with the help of generative AI and edited by an editor at Research Matters.